【Academic Seminar】From Dominant Eigenspace Computation to Orthogonal Constrained Optimization Problems

Title: From Dominant Eigenspace Computation to Orthogonal Constrained Optimization Problems

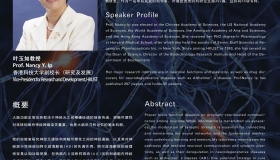

Speaker: Prof. Xin Liu

Time and Date: 4:00-5:00pm, November 19, 2018

Venue: Boardroom, Dao Yuan Building

Biography of Speaker:

Dr. Xin Liu is an associate professor and doctoral supervisor at the Academy of Mathematics and Systems Science (AMSS), Chinese Academy of Sciences (CAS).

He received his bachelor's degree from the School of Mathematical Sciences, Peking University in 2004, and his PhD from the University of Chinese Academy of Sciences in 2009, under the supervision of Professor Ya-xiang Yuan. After graduation, he visited Zuse Institute Berlin and Rice University for one year each, and the Courant Institute of Mathematical Sciences, New York University for half a year. His research interests include optimization problems with orthogonality constraints, linear and nonlinear eigenvalue problems, nonlinear least squares, and distributed optimization. Dr. Xin Liu is the principal investigator of four NSFC (National Natural Science Foundation of China) grants, including the Excellent Youth Grant. He was granted the Jingrun Chen Future Star Program from AMSS in 2014, the Science and Technology Award for Youth from the Operations Research Society of China (ORSC) in 2016, and the Morning Star Award from the Beijing Branch of CAS in 2017. He was selected as an associate editor of “Mathematical Programming Computation” in 2015, a council member of ORSC in 2016, an associate editor of “Mathematica Numerica Sinica” in 2017, and an associate editor of “Acta Physica Sinica” and the vice president of the Physical Sciences Division of the Youth Innovation Promotion Association of CAS in 2018.

Abstract:

Recently, identifying dominant eigenvalues or singular values of a sequence of closely related matrices has become an indispensable algorithmic component of many first-order methods for various convex optimization problems, such as semidefinite programming, low-rank matrix completion, robust principal component analysis, sparse inverse covariance matrix estimation, nearest correlation matrix estimation, and so on. More often than not, the computation of the dominant eigenspace forms a major bottleneck in the overall efficiency of the solution process. The well-known Krylov subspace methods have a few limitations, including a lack of scalability. Since the dominant eigenspace computation can be formulated as a special orthogonal constrained optimization problem, we propose a few optimization-based approaches which perform robustly and efficiently in a wide range of scenarios. Moreover, we study general orthogonal constrained optimization problems and propose a new algorithmic framework in which calculations related to either the Stiefel manifold or its tangent space are waived. Numerical results illustrate the great potential of the new algorithms based on this framework.
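To make the formulation mentioned in the abstract concrete: for a symmetric matrix A, the dominant k-dimensional eigenspace is commonly characterized as a maximizer of trace(XᵀAX) over n-by-k matrices X with XᵀX = I. The sketch below is only an illustration of that formulation and is not the speaker's algorithm. It uses plain subspace (orthogonal) iteration with a QR step, assumes A is symmetric positive semidefinite with a gap after the k-th eigenvalue, and the function names `dominant_eigenspace` and `trace_objective` are hypothetical.

```python
import numpy as np


def trace_objective(A, X):
    """Objective trace(X^T A X) of the orthogonality-constrained formulation."""
    return float(np.trace(X.T @ A @ X))


def dominant_eigenspace(A, k, iters=500, seed=0):
    """Approximate an orthonormal basis of the dominant k-dimensional
    eigenspace of a symmetric positive semidefinite matrix A.

    Plain subspace iteration: multiply by A, then re-orthonormalize with QR.
    This is a textbook baseline chosen for illustration only.
    """
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    # Random starting point with orthonormal columns (feasible: X^T X = I).
    X, _ = np.linalg.qr(rng.standard_normal((n, k)))
    for _ in range(iters):
        # The QR factorization keeps the iterate feasible at every step.
        X, _ = np.linalg.qr(A @ X)
    return X
```

At convergence, `trace_objective(A, X)` should approach the sum of the k largest eigenvalues of A. Note that every iteration performs an explicit orthonormalization, which is the kind of feasibility-maintaining step whose cost motivates looking for alternatives.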